Hey all, this is a guide I have wanted to make for a long time. I have learned so much about AI art while creating my game and figured it was time to share the knowledge.

Disclaimer: This guide is OPINIONATED! That means, this is how I make AI art. This guide is not "the best way to make AI art, period." There are many MANY AI tools out there and this guide covers only a very small number of them. My process is not perfect.

Hardware Requirements:

- The most important spec in your PC when creating AI art is your GPU's VRAM. It really doesn't matter how old your GPU is (though newer ones will be faster); the limiting factor on what you can and cannot do with AI is almost always going to be your GPU's VRAM.

- This guide may work with as little as 4 GB of VRAM, but in general I recommend at least 12 GB, with 16 GB being preferred.
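If you aren't sure which bucket your card falls into, here is a rough Python sketch of the thresholds above. The function names are my own and purely illustrative. The `detect_vram_gb` helper assumes an Nvidia card with the `nvidia-smi` command-line tool installed, which reports memory in MiB.

```python
import subprocess


def vram_tier(vram_gb: float) -> str:
    """Map a VRAM amount in GB to the tiers described in this guide."""
    if vram_gb >= 16:
        return "preferred"
    if vram_gb >= 12:
        return "recommended"
    if vram_gb >= 4:
        return "may work"
    return "below the guide's minimum"


def parse_vram_gb(nvidia_smi_output: str) -> int:
    """Convert the first line of nvidia-smi's MiB output to whole GB."""
    first_line = nvidia_smi_output.strip().splitlines()[0]
    # Cards often report slightly under the advertised size, so round.
    return round(int(first_line) / 1024)


def detect_vram_gb() -> int:
    """Ask nvidia-smi for total VRAM (assumes an Nvidia GPU and driver)."""
    output = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_vram_gb(output)


# Pure-Python demo that does not need a GPU.
for gb in (4, 8, 12, 16, 24):
    print(f"{gb} GB -> {vram_tier(gb)}")
```

On a machine with an Nvidia card, `vram_tier(detect_vram_gb())` should tell you where you stand before you install anything.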

No hardware? No problem:

- If you do not have a good GPU, or just want to try some things out before buying one, the primary tool that I use in this tutorial offers a paid online service. It is the exact same tool; it just runs on the website and costs money per month.

- You can check it out here: [link]

GPU Buying Guide:

- Buying Nvidia will be the most headache-free way to generate AI art, though it is generally possible to make things work on AMD cards with some effort. This guide will not cover the steps needed to make things work on AMD GPUs, though the tools I use all claim to support AMD as well.

| Budget | Recommended card | Why |
| --- | --- | --- |
| On a tight budget | Used RTX 4060 Ti (16 GB VRAM) | This card is modern, reasonably fast, and has 16 GB of VRAM. |
| Middle of the road | RTX 5070 Ti (16 GB VRAM) | This also has 16 GB of VRAM, but will be significantly faster than a 4060 Ti. |
| High VRAM on a budget | Used RTX 3090 (24 GB VRAM) | If you want 24 GB of VRAM to unlock higher resolutions and the possibility of video generation, the RTX 3090 is the most reasonable option. |
| Maximum power | RTX 5090 (32 GB VRAM) | If you have deep pockets, the RTX 5090 has the most VRAM of any consumer card and is much faster than the RTX 4090. The RTX 4090 is a great card, but prices are extremely high right now. If you can find a deal, that's another good buy. |
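If you'd rather filter the options by how much VRAM you need, the buying guide can be written down as data. This is only a small Python sketch; the `Card` type and `cards_with_at_least` helper are names I made up for illustration, and the rows are copied straight from my recommendations.

```python
from typing import NamedTuple


class Card(NamedTuple):
    budget: str
    name: str
    vram_gb: int


# Rows copied from the buying guide in this post.
CARDS = [
    Card("On a tight budget", "Used RTX 4060 Ti", 16),
    Card("Middle of the road", "RTX 5070 Ti", 16),
    Card("High VRAM on a budget", "Used RTX 3090", 24),
    Card("Maximum power", "RTX 5090", 32),
]


def cards_with_at_least(vram_gb: int) -> list[str]:
    """Return the names of recommended cards with at least this much VRAM."""
    return [card.name for card in CARDS if card.vram_gb >= vram_gb]


print(cards_with_at_least(24))
```

Asking for 24 GB or more leaves only the used RTX 3090 and the RTX 5090, which matches the point about higher resolutions and video generation.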

Installation and Setup:

- The tool I will use in this tutorial is called Invoke. It has both a paid online version, and a free local version that runs on your computer. I will be using the local version, but everything in this tutorial also works in the online version.

- Website: [link]

- The following steps cover installing the local version. If you are using the online version, you can skip all of them.

- Download the latest version of Invoke from here: [link]

- Run the file you downloaded. It will ask you questions about your hardware and where to install. Continue until it is installed successfully.

- If you have a low-VRAM GPU (8 GB or less), follow these additional steps to greatly improve speed: [link]

- Click Launch

Now, you will get a window like this:

Understanding Models

Now, the most important part of AI generation: selecting a model. What is a model? I will spare you the technical details, most of which I don't understand either. Here's what you need to know about models:

- Your model determines how your image will look.
  - If you get an anime model, it will generate anime images.
  - If you get a realism model, it will generate images that look like a real photograph.

- Each model "understands" different things.
  - One model might interpret the prompt "Looking at camera" as having the main character in the image make eye contact with the viewer.
  - A different model might interpret the prompt as having the main character literally look at a physical camera object within the scene.

Your base model is the most important thing in determining how your images will look. Here are links to some example models (note that there are thousands and thousands of models available).

Anime Models

- [link]
  - This is a popular anime model.

- [link]
  - This is also an anime model; however, it produces a different style of illustration from the other model.

- [link]
  - This anime model produces images in more of a '3D style'.

Realism Models

- [link]
  - This is the most popular realism model. However, I will have a section below specifically on Flux which covers some things you will need to know before using it.

- [link]
  - While realism models don't technically have different 'styles' like anime models do, it is important to note that different realism models produce different styles of realism. Some models might be better at creating old people. Some might produce exclusively studio-photography-style images. Some might produce more amateur-style images of lower quality.

- [link]

Generating Your First Image

Alright, with all that new knowledge in your head, I will provide a recommended model for the remainder of this tutorial.

We will use [link], which is a very popular anime model that is based on Illustrious.

To download this, you will need an account on Civitai. Civitai is the primary place where users in the AI community share models. Create an account and then continue with this tutorial.

After you've created an account, install this model by right-clicking here and clicking 'Copy Link':

Now, go back to Invoke and click here:

Then, paste the link here, and click Install:

Most models are around 6 GB; Flux, however, is around 30 GB.
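To get a feel for what those sizes mean in practice, here is a quick back-of-the-envelope Python sketch. The 100 Mbit/s connection speed is a hypothetical number I picked for illustration, and both helper names are my own. The disk check uses Python's standard `shutil.disk_usage`.

```python
import shutil


def download_minutes(size_gb: float, speed_mbps: float) -> float:
    """Estimate download time: GB -> megabits (x 8000), / Mbit/s, / 60."""
    return size_gb * 8000 / speed_mbps / 60


def has_room_for(size_gb: float, path: str = ".") -> bool:
    """Check whether the drive holding `path` has enough free space."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= size_gb


SPEED_MBPS = 100  # hypothetical connection speed, change to match yours
for name, size_gb in (("typical model", 6), ("Flux", 30)):
    minutes = download_minutes(size_gb, SPEED_MBPS)
    print(f"{name}: ~{minutes:.0f} min at {SPEED_MBPS} Mbit/s, "
          f"enough disk space: {has_room_for(size_gb)}")
```

At that assumed speed, a typical model takes roughly 8 minutes and Flux roughly 40, so it is worth checking your free disk space before you start the bigger download.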

When it is done, you will see it here:

Now go back to the canvas by clicking here:

You will see the model has been automatically selected for you. But if you chose to install other models too, you can select the model here:

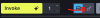

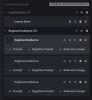

Now, enter these prompts:

- Positive Prompt
  - masterpiece, best quality, highres, absurdres, hatsune miku, teal bikini, outdoors, beach, sunny, sand, ocean, sitting, straight on, umbrella, towel, feet

- Negative Prompt
  - bad quality, worst quality, worst aesthetic, lowres, monochrome, greyscale, abstract, bad anatomy, bad hands, watermark

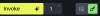
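These prompts are just comma-separated tags, so if you end up reusing pieces of them, it can help to keep the tags in groups and join them. The following is a minimal Python sketch of that idea. The grouping into quality, subject, scene, and detail tags is my own assumption about how to organize them, not something Invoke requires, and `build_prompt` is a hypothetical helper.

```python
def build_prompt(*tag_groups: list[str]) -> str:
    """Join tag groups into one comma-separated prompt, dropping duplicates."""
    seen: set[str] = set()
    tags: list[str] = []
    for group in tag_groups:
        for tag in group:
            tag = tag.strip()
            # Skip empty tags and case-insensitive repeats.
            if tag and tag.lower() not in seen:
                seen.add(tag.lower())
                tags.append(tag)
    return ", ".join(tags)


# Tags taken from the positive prompt in this guide; the grouping is mine.
QUALITY = ["masterpiece", "best quality", "highres", "absurdres"]
SUBJECT = ["hatsune miku", "teal bikini"]
SCENE = ["outdoors", "beach", "sunny", "sand", "ocean"]
DETAILS = ["sitting", "straight on", "umbrella", "towel", "feet"]

# Tags taken from the negative prompt in this guide.
NEGATIVE = [
    "bad quality", "worst quality", "worst aesthetic", "lowres",
    "monochrome", "greyscale", "abstract", "bad anatomy", "bad hands",
    "watermark",
]

positive_prompt = build_prompt(QUALITY, SUBJECT, SCENE, DETAILS)
negative_prompt = build_prompt(NEGATIVE)
print(positive_prompt)
print(negative_prompt)
```

Swapping out one group, such as `SCENE`, gives you a new prompt while keeping the quality tags that work well with this model, and you can paste the printed strings straight into the prompt boxes.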

And click 'Invoke'

Congratulations! You have made your first image:

Now, you can create great AI art using only what you've seen so far and you're free to stop and experiment here. However, this is only the beginning of what you can do with AI.

In part 2, I will start to get into more tools and options you have available.
